Introduction

Robotics developer Figure recently drew attention and praise with a video demonstration of its first humanoid robot holding real-time conversations, powered by generative artificial intelligence from OpenAI.

Enabling Conversations with OpenAI

Figure announced on Twitter that, with OpenAI technology integrated, its robot, Figure 01, can now hold full conversations with people. The company presented this as a milestone in the robot’s ability to understand people and respond to them promptly.

Under the collaboration, OpenAI’s models supply high-level visual and language intelligence, while Figure’s own neural networks handle fast, low-level, dexterous robot actions.

Interaction with Figure 01

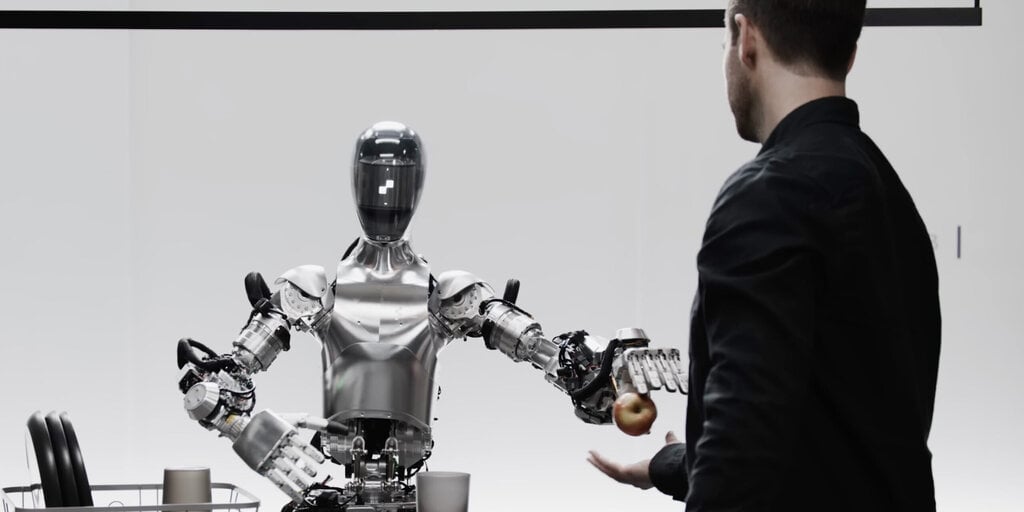

In the demonstration video, Figure 01 interacts with Corey Lynch, a senior AI engineer at Figure. Lynch set up a series of tasks in a mock kitchen, beginning by asking Figure 01 to identify the items in front of it, such as an apple, dishes, and cups.

When Lynch asked for something to eat, Figure 01 picked out the apple and handed it to him. Lynch then had the robot collect trash into a bin while he asked it questions, showing that it could talk and act at the same time.

Project Insights from Corey Lynch

Corey Lynch later expanded on the Figure 01 project on Twitter, describing what the robot can now do. According to Lynch, Figure 01 can describe what it sees, plan future actions, reflect on its memory, and explain its reasoning verbally.

Lynch explained that images from the robot’s cameras, together with text transcribed from speech captured by its onboard microphones, are fed into a large multimodal model trained by OpenAI. Multimodal AI refers to models that can understand and generate more than one type of data, such as text and images.

Technical Operation of Figure 01

Lynch stated that Figure 01’s behaviors are learned rather than teleoperated, and that they run at normal speed (the footage was not sped up). He explained that the model processes the entire conversation history, including past images, to produce language responses, which are then spoken back to the human via text-to-speech.
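To make the idea of conditioning on the whole conversation concrete, the sketch below shows one way a history buffer might interleave camera frames and speech turns and hand the full sequence to a model on every turn. It is a hypothetical illustration, not Figure’s or OpenAI’s code: the names `ImageFrame`, `SpeechTurn`, `ConversationHistory`, `respond`, and the stand-in `fake_model` are all assumptions, and text-to-speech is reduced to returning the reply string.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Union


@dataclass
class ImageFrame:
    """Hypothetical stand-in for a camera frame (real frames hold pixel data)."""
    frame_id: int


@dataclass
class SpeechTurn:
    """A transcribed human utterance or a robot reply."""
    speaker: str
    text: str


Event = Union[ImageFrame, SpeechTurn]


@dataclass
class ConversationHistory:
    events: List[Event] = field(default_factory=list)

    def add_image(self, frame_id: int) -> None:
        self.events.append(ImageFrame(frame_id))

    def add_speech(self, speaker: str, text: str) -> None:
        self.events.append(SpeechTurn(speaker, text))


def respond(history: ConversationHistory,
            model: Callable[[List[Event]], str]) -> str:
    """Ask the model for a reply given the entire history, images included.

    The reply is appended to the history so later turns can refer back to it;
    a real system would then pass it to a text-to-speech engine.
    """
    reply = model(list(history.events))
    history.add_speech("robot", reply)
    return reply


def fake_model(events: List[Event]) -> str:
    """Hypothetical placeholder model: reports how much context it received."""
    frames = sum(isinstance(e, ImageFrame) for e in events)
    turns = sum(isinstance(e, SpeechTurn) for e in events)
    return f"frames={frames}, turns={turns}"


if __name__ == "__main__":
    history = ConversationHistory()
    history.add_image(1)
    history.add_speech("human", "What do you see right now?")
    history.add_image(2)
    print(respond(history, fake_model))  # frames=2, turns=1
```

Because the robot’s own replies are appended to the same history, each new turn sees everything said and seen so far, which is what lets the model answer questions about earlier moments in the conversation.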

According to Lynch, the same model also decides which learned behavior to run on the robot to carry out a given command, loading the corresponding neural network weights onto the GPU and executing that policy.
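One way to picture the model choosing a learned behavior is as a dispatch from a behavior name to a policy. The sketch below is purely illustrative: the registry `BEHAVIORS`, the behavior names `hand_over_apple` and `put_trash_in_bin`, the helper `run_behavior`, and the toy policies are all hypothetical, and loading network weights onto a GPU is reduced to looking up a function.

```python
from typing import Callable, Dict, List

# A "policy" here is a toy function that returns placeholder action labels.
# In the system Lynch describes, each behavior would instead be a set of
# learned neural network weights loaded onto the GPU.
Policy = Callable[[int], List[str]]


def hand_over_apple(steps: int) -> List[str]:
    return [f"apple_action_{i}" for i in range(steps)]


def put_trash_in_bin(steps: int) -> List[str]:
    return [f"trash_action_{i}" for i in range(steps)]


# Hypothetical registry of learned behaviors, keyed by name.
BEHAVIORS: Dict[str, Policy] = {
    "hand_over_apple": hand_over_apple,
    "put_trash_in_bin": put_trash_in_bin,
}


def run_behavior(name: str, steps: int = 3) -> List[str]:
    """Look up the behavior the model selected and execute its policy."""
    try:
        policy = BEHAVIORS[name]  # stands in for "load the weights"
    except KeyError:
        raise ValueError(f"no learned behavior named {name!r}") from None
    return policy(steps)


if __name__ == "__main__":
    # Suppose the language model decided a request for food calls for
    # the behavior below.
    print(run_behavior("hand_over_apple", steps=2))
    # ['apple_action_0', 'apple_action_1']
```

Keeping the language model’s output to a choice among named behaviors, rather than raw motor commands, separates high-level decision-making from low-level control, which matches the division of labor between OpenAI’s models and Figure’s own networks.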

Comprehension and Decision-Making

Figure 01 is designed to describe its surroundings concisely and apply common-sense reasoning when making decisions. It can translate ambiguous, high-level requests, such as “I’m hungry”, into concrete actions, such as offering an apple, and explain the reasoning behind each action it takes.

Response and Feedback

Figure 01’s first demonstration drew an enthusiastic response on Twitter, with many users impressed by the robot’s capabilities. Some described it as a significant step toward the singularity.

One Twitter user joked about the Terminator film franchise, while another quipped that it was time to find John Connor, fast.

Technical Insights from Corey Lynch

For AI developers and researchers, Lynch shared further technical details. All of Figure 01’s behaviors are driven by neural network visuomotor transformer policies, which take onboard camera images and generate 24-degree-of-freedom (24-DOF) actions, namely wrist poses and finger joint angles, at 200 Hz.
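The two numbers Lynch gives imply a tight timing budget. The short calculation below derives it from only those figures, 24 degrees of freedom and 200 Hz; the helper name `action_budget` is our own, and the sketch says nothing about how Figure’s controller is actually scheduled.

```python
from typing import Tuple


def action_budget(rate_hz: float, dof: int) -> Tuple[float, float]:
    """Return (milliseconds between actions, action values emitted per second)."""
    period_ms = 1000.0 / rate_hz       # time available to produce each action
    values_per_second = rate_hz * dof  # scalar joint/pose targets per second
    return period_ms, values_per_second


if __name__ == "__main__":
    period_ms, values = action_budget(rate_hz=200, dof=24)
    print(f"{period_ms:.1f} ms per action, {values:.0f} values per second")
    # 5.0 ms per action, 4800 values per second
```

In other words, each new 24-value action must be ready every 5 ms, which is why this low-level control is handled by dedicated policies rather than by the conversational model.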

Implications of Figure 01’s Debut

Figure 01’s debut comes as policymakers and world leaders debate how AI should be integrated into mainstream applications. While much of that discussion has centered on large language models, developers are also working to give AI a physical form in humanoid robots.

Figure AI and OpenAI have not yet responded to requests for comment from Decrypt.

Ken Goldberg, a professor of industrial engineering at UC Berkeley, pointed to the practical goals pursued by developers such as Elon Musk, particularly in space exploration, where robots with sophisticated AI could provide valuable assistance.

Conclusion

As AI and robotics converge, Figure’s collaboration with OpenAI on Figure 01 marks a notable step forward in human-robot interaction. The capabilities shown in the demonstration illustrate ongoing progress in AI and its integration into real-world applications.
