Utilizing Large Language Models to Revolutionize Robotics Training

A recent study conducted by researchers at Nvidia, the University of Pennsylvania, and the University of Texas, Austin, has shed light on a groundbreaking technique named DrEureka. This innovative method leverages Large Language Models (LLMs) to expedite the training of robotics systems in a manner that exceeds human capabilities. The study introduces DrEureka as a domain randomization technique that can automatically generate reward functions and randomization distributions for robotics systems, based on a high-level description of the target task.

The Significance of Sim-to-Real Transfer in Robotics

One of the primary challenges faced in the field of robotics is the sim-to-real transfer gap, which refers to the disparity between simulated and real-world environments. Traditionally, policies for robotics models are trained in simulated environments and then deployed in real-world settings, requiring manual adjustments to optimize performance. However, recent advancements in LLMs have demonstrated the potential to bridge this gap effectively by combining vast world knowledge with reasoning capabilities to learn complex low-level skills.

While LLMs can design reward functions necessary for robotics reinforcement learning systems, the manual tweaking required to transfer policies from simulation to reality remains a time-consuming task. This is where DrEureka steps in to automate this process and optimize domain randomization parameters.

The DrEureka Approach

DrEureka builds upon the Eureka technique introduced in 2023, which utilizes LLMs to generate reward functions for robotic tasks. The key innovation of DrEureka lies in its ability to automatically configure domain randomization parameters to account for real-world unpredictability. By randomizing physical parameters in the simulation environment, the RL policy can generalize to various perturbations encountered in real-world scenarios.

Unlike Eureka, which focuses on training RL policies in simulations, DrEureka’s automated domain randomization significantly reduces the need for manual adjustments during the sim-to-real transfer process. By allowing LLMs to tackle the complexity of designing DR parameters with their profound grasp of physical knowledge, DrEureka streamlines the optimization of reward functions and domain randomization configurations concurrently.

Validating DrEureka’s Efficacy

The efficacy of DrEureka was evaluated on quadruped and dexterous manipulator platforms, showcasing its versatility across diverse robotic tasks. Results indicated that policies trained using DrEureka outperformed human-designed systems significantly in various real-world scenarios. Noteworthy achievements include a 34% increase in forward velocity and a 20% improvement in distance traveled in quadruped locomotion, as well as a 300% enhancement in cube rotations in dexterous manipulation.

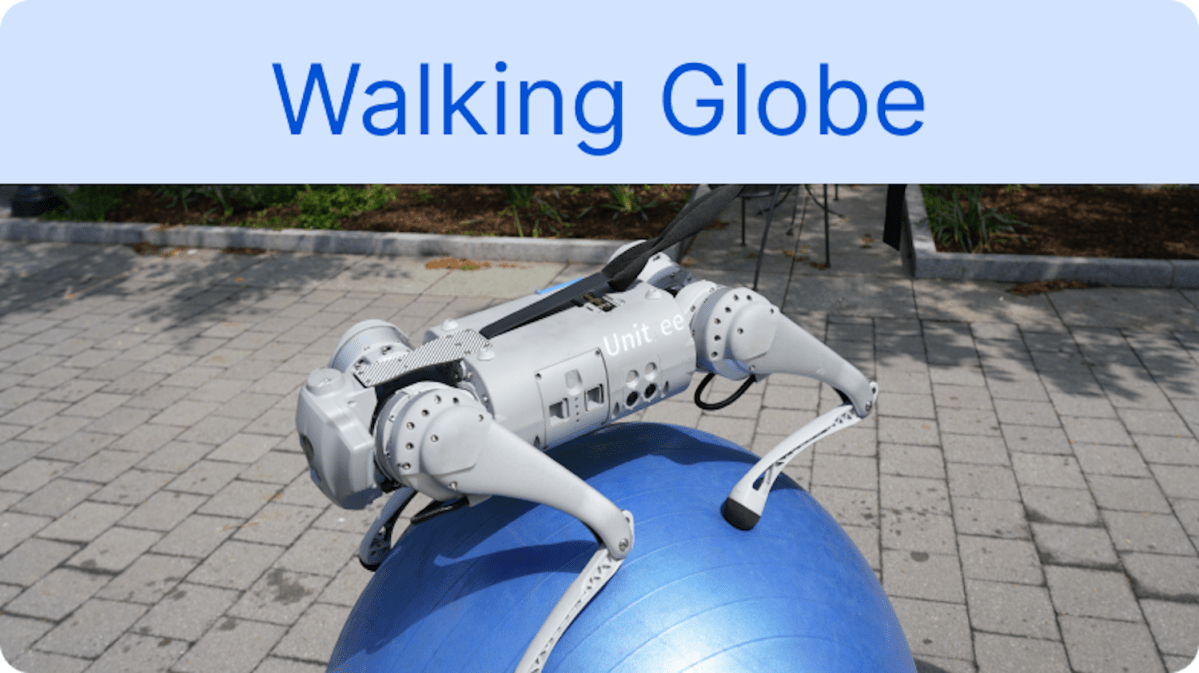

Perhaps the most intriguing application demonstrated the ability of DrEureka to train a robo-dog to balance and walk on a yoga ball successfully, showcasing its adaptability to novel tasks. The study emphasizes the pivotal role of safety instructions in task descriptions to ensure logical instructions for real-world transfer.

Overall, the researchers envision DrEureka as a game-changer in accelerating robot learning research by automating the intricate design aspects of low-level skill learning through the utilization of foundation models.

Image/Photo credit: source url