Apple Researchers Develop New Techniques for Training Multimodal Large Language Models

Apple researchers have developed new methods for training large language models on both text and images. The work could make AI systems more capable and may inform future Apple products.

Research Overview

The research, described in a paper titled “MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training” and published on arxiv.org, shows that carefully combining different types of training data and model architectures is key to achieving top-tier performance across a range of AI benchmarks.

By mixing image-caption pairs, interleaved image-text documents, and text-only data during pre-training, the researchers achieved strong performance on tasks such as image captioning, visual question answering, and natural language inference.
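As a rough illustration of what mixing these three data types during pre-training can look like, the Python sketch below picks the source of each training example at random according to fixed mixture weights. The source names, the weights, and the `sample_sources` helper are hypothetical choices for this example, not Apple's training code; the paper itself reports the ratios the authors actually settled on.

```python
import random

# Illustrative mixture weights (an assumption for this sketch, not a
# verified MM1 recipe): share of examples drawn from each data source.
MIXTURE = {
    "image_caption": 0.45,  # image paired with a short caption
    "interleaved": 0.45,    # documents with images embedded in running text
    "text_only": 0.10,      # plain text, to preserve language ability
}


def sample_sources(n, weights=MIXTURE, seed=0):
    """Return n source labels, each drawn with probability proportional to its weight."""
    rng = random.Random(seed)  # seeded so the draw is reproducible
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


if __name__ == "__main__":
    batch = sample_sources(10_000)
    for name in MIXTURE:
        # Observed fractions should land close to the configured weights.
        print(name, round(batch.count(name) / len(batch), 3))
```

Sampling per example like this keeps every batch close to the target mix; changing the weights is the main knob for trading off multimodal and text-only ability.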

Key Findings

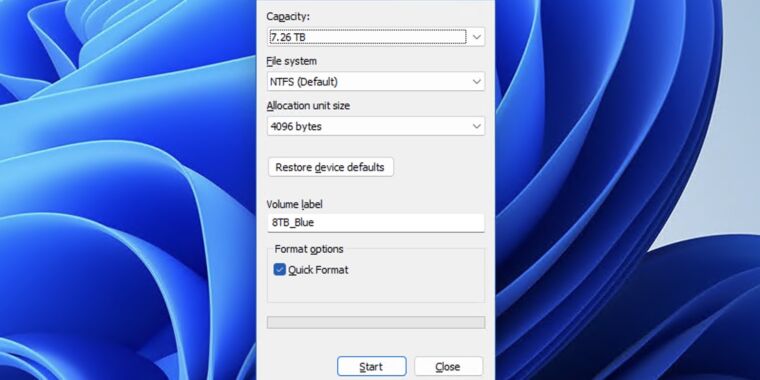

The study found that the choice of image encoder and the resolution of input images have a major impact on model performance. The researchers concluded that scaling and refining the visual components of multimodal models will be essential for further progress in this area.
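One reason input resolution matters is that ViT-style image encoders split an image into fixed-size square patches and turn each patch into a token, so the number of visual tokens grows with the square of the image's side length. The sketch below assumes a patch size of 14 pixels, which is common for such encoders but is an assumption here; `num_image_tokens` is a hypothetical helper, not part of any library.

```python
def num_image_tokens(resolution: int, patch_size: int = 14) -> int:
    """Return the number of patch tokens for a square resolution x resolution image.

    Assumes a ViT-style encoder that tiles the image into non-overlapping
    patch_size x patch_size patches and adds no extra class token.
    """
    if resolution <= 0 or patch_size <= 0:
        raise ValueError("resolution and patch_size must be positive")
    if resolution % patch_size != 0:
        raise ValueError("resolution must be a multiple of patch_size")
    patches_per_side = resolution // patch_size
    return patches_per_side * patches_per_side


if __name__ == "__main__":
    for res in (224, 336):
        print(f"{res}x{res} -> {num_image_tokens(res)} tokens")
    # prints "224x224 -> 256 tokens" then "336x336 -> 576 tokens"
```

Under these assumptions, going from 224×224 to 336×336 pixels more than doubles the token count (256 to 576). That gives the model more visual detail to work with, at the cost of more compute per image.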

The researchers also found that the largest MM1 model, with 30 billion parameters, showed strong in-context learning, allowing it to perform multi-step reasoning when given only a few examples in its prompt (few-shot prompting). This suggests that large multimodal models could tackle complex problems that require deep language understanding and generation.

Apple’s Strategic AI Investments

Apple has been steadily increasing its investment in AI as it seeks to compete with industry leaders such as Google, Microsoft, and Amazon in bringing generative AI to their products. Reports suggest that Apple is spending approximately $1 billion a year on AI to improve products such as Siri, Messages, and Apple Music.

Projects such as the “Ajax” framework and the “Apple GPT” chatbot reflect Apple’s interest in applying AI to personalized playlist generation, coding assistance, and conversational interaction. According to Apple CEO Tim Cook, AI and machine learning are foundational to the company’s product strategy and will underpin future advances across its entire product portfolio.

The Road Ahead

Apple has historically preferred to watch technological shifts closely before adopting them, but the company now faces intense pressure to stay competitive in the rapidly evolving AI landscape. The MM1 research shows that Apple can produce cutting-edge work; however, the company will need to move faster to keep pace in the intensifying AI race.

Attention now turns to Apple’s upcoming Worldwide Developers Conference, where the company is expected to unveil new AI features and developer tools. As Apple continues to make steady progress in AI, its focus on mastering multimodal intelligence at scale suggests it intends to play an active role in shaping the future of the field.
